Disaster Recovery for Pooled Desktops

Pooled desktops are nonpersistent, which is why we use FSLogix to store user profiles on a remote file share or storage service. Since we have already covered the pooled desktop concepts, you know that we create multiple resources as part of a pooled desktop deployment, and you must plan disaster recovery for each of the required resources.

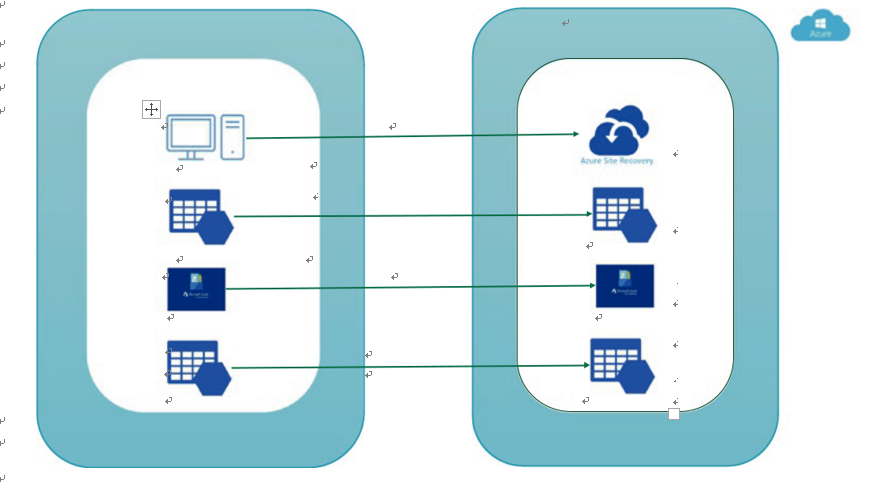

The following are the resources of a pooled desktop, and we will see how we can plan DR for each resource (see Figure 10-2):

–– Pooled user profile (DFS file share/Azure File Share Storage/Azure NetApp)

–– Pooled host pool session hosts

–– Pooled image

–– MSIX package storage account

–– On-premises connectivity and authentication

Figure 10-2. Disaster recovery for pooled desktop

User profile storage is the most important resource in a pooled desktop because it keeps all user profile data on a remote file share. There are multiple options for storing user profiles, such as DFS file shares, Azure storage, or Azure NetApp Files, and each has different DR options.

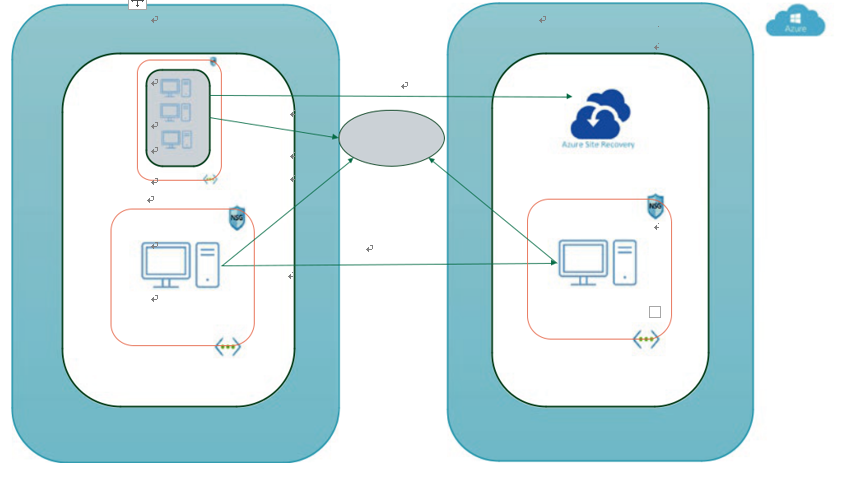

–– DFS/Windows file share: If you are using a DFS file share, you must configure a replica of the file share on a file server in the DR region, and DFS Replication will replicate the files to that region. Because clients access the share through a common namespace, the DFS file share remains available under the same share name from the DR region if a disaster hits the primary region. See Figure 10-3.

Figure 10-3. Disaster recovery for pooled desktop with DFS

Windows stand-alone file shares are not a recommended solution for Azure Virtual Desktop user profiles, and I don’t see any reason to use this option since much better options are available for user profile storage. If you do use a stand-alone file share, be aware that it has no built-in replication: you have to use robocopy or PowerShell to copy files to a file share in another region, and you have to manage the file share DNS names so that you don’t need to change the FSLogix user profile registry settings every time you fail over.
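As a rough illustration of the robocopy approach, the sketch below builds a mirror command for copying a profile share to a DR file server. The share paths are hypothetical, and the thread, retry, and wait values are assumptions to tune for your environment. Note that FSLogix containers attached to active sessions are locked, so they may be reported as failed copies until users sign out.

```python
import subprocess
from typing import List


def build_robocopy_command(source: str, destination: str, threads: int = 16,
                           retries: int = 2, wait_seconds: int = 5) -> List[str]:
    """Build a robocopy argument list that mirrors a profile share to a DR share."""
    return [
        "robocopy",
        source,
        destination,
        "/MIR",                # mirror the tree; also deletes files missing from source
        "/COPYALL",            # copy data, attributes, timestamps, ACLs, owner, auditing info
        f"/MT:{threads}",      # number of copy threads
        f"/R:{retries}",       # retries per failed file
        f"/W:{wait_seconds}",  # seconds to wait between retries
    ]


def run_copy(source: str, destination: str) -> bool:
    """Run robocopy (Windows only). Exit codes below 8 mean no copy failures."""
    result = subprocess.run(build_robocopy_command(source, destination), check=False)
    return result.returncode < 8


if __name__ == "__main__":
    # Hypothetical share paths; replace with your primary and DR file servers.
    cmd = build_robocopy_command(r"\\fs-primary\profiles", r"\\fs-dr\profiles")
    print(" ".join(cmd))
```

Keeping the command builder separate from `run_copy` lets you log or review the exact command before scheduling it, for example as a Windows scheduled task.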

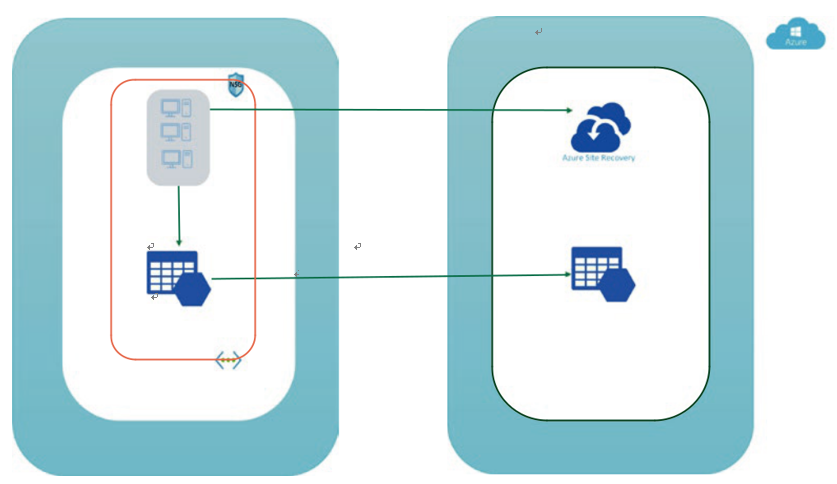

–– Azure File Share storage: Azure file share storage is the most commonly used solution for FSLogix user profiles because it is Azure native and integrates easily with other services such as Azure AD, AD DS, and private endpoints in a virtual network. Azure storage offers two options: premium and standard storage.

Standard storage: This comes with replication and a failover option to another region, but it is not recommended for production workloads because its limited IOPS can degrade AVD performance. Premium storage provides more IOPS than standard storage. If you are using a standard storage account, you can enable replication to another region, and in the case of a disaster you can fail the storage account over to that region. Refer to Chapter 5 for detailed steps on the FSLogix VHDLocations implementation. See Figure 10-4.

Figure 10-4. Disaster recovery for pooled user profile

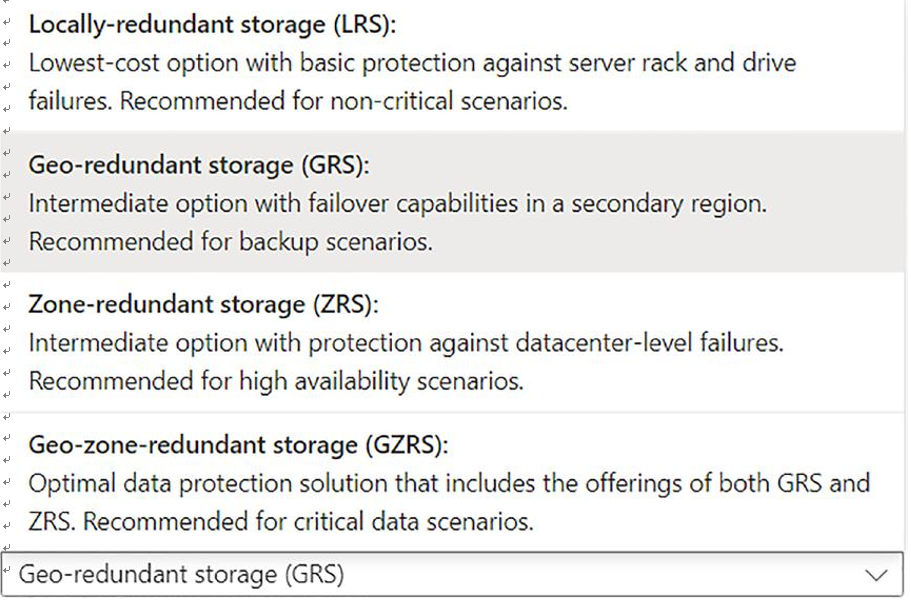

If you want to use standard storage, make sure you set the redundancy to GRS or GZRS so that you can enable georeplication. See Figure 10-5.

Figure 10-5. Pooled user profile storage account creation

Figure 10-6 shows the redundancy options available for a standard storage account.

Figure 10-6. Storage redundancy
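If you manage several profile storage accounts, a quick inventory check can flag which ones cannot use georeplication at all. The sketch below assumes the Azure Resource Manager SKU names (`Standard_GRS`, `Standard_RAGRS`, `Standard_GZRS`, `Standard_RAGZRS` are the geo-redundant ones); the account names are hypothetical.

```python
from typing import Dict, List

# Storage account SKUs whose redundancy includes a secondary region.
GEO_REDUNDANT_SKUS = frozenset({
    "Standard_GRS",
    "Standard_RAGRS",
    "Standard_GZRS",
    "Standard_RAGZRS",
})


def supports_georeplication(sku_name: str) -> bool:
    """True if the SKU replicates data to a secondary region."""
    return sku_name in GEO_REDUNDANT_SKUS


def accounts_without_georeplication(accounts: Dict[str, str]) -> List[str]:
    """Return sorted account names that need another DR approach, e.g. cloud cache."""
    return sorted(name for name, sku in accounts.items()
                  if not supports_georeplication(sku))


if __name__ == "__main__":
    # Hypothetical profile storage accounts and their SKUs.
    inventory = {
        "stprofilesstd": "Standard_GZRS",
        "stprofilespremium": "Premium_LRS",
    }
    print(accounts_without_georeplication(inventory))  # ['stprofilespremium']
```

Any account this reports, such as a premium account, needs a different DR approach like the FSLogix cloud cache.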

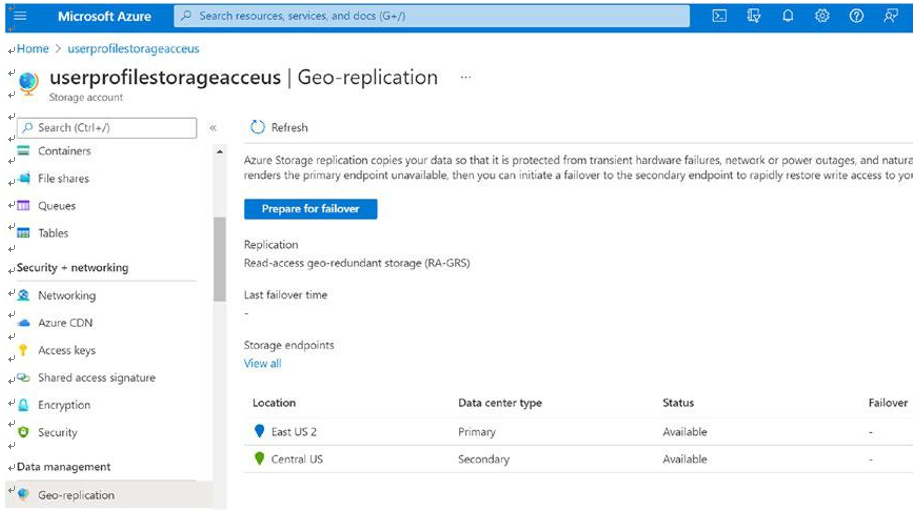

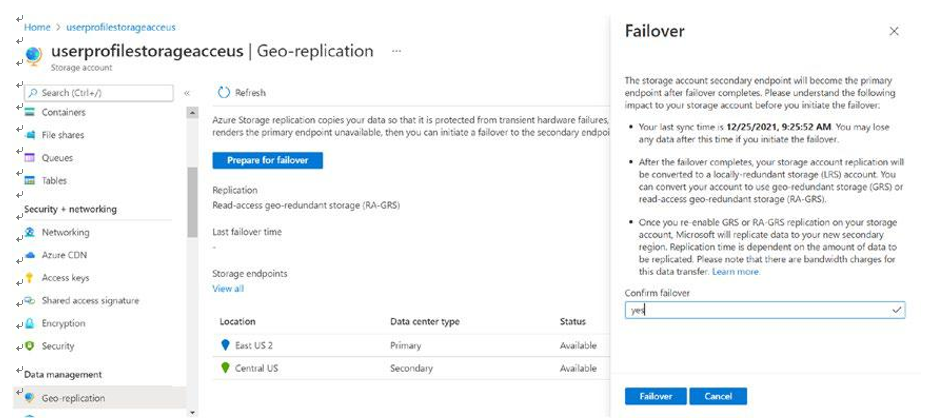

You can view georeplication by opening the georeplication option in the user profile storage account. There you can see the replication status of each data center type (primary and secondary), and in the case of a disaster you can fail over by clicking “Prepare for failover.” See Figure 10-7 and Figure 10-8.

Figure 10-7. Storage georeplication

Figure 10-8. Storage georeplication and failover
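Because georeplication is asynchronous, the secondary region can lag behind the primary. The storage account reports a Last Sync Time, and writes made after it may be lost when you fail over. The sketch below computes that exposure window; the timestamps and the 15-minute tolerance are hypothetical, and the tolerance stands in for whatever recovery point objective your organization sets.

```python
from datetime import datetime, timedelta, timezone


def failover_exposure(last_sync_time: datetime, now: datetime) -> timedelta:
    """Time window of writes that may not have reached the secondary region yet."""
    if last_sync_time.tzinfo is None or now.tzinfo is None:
        raise ValueError("use timezone-aware datetimes")
    return max(now - last_sync_time, timedelta(0))


def within_tolerance(last_sync_time: datetime, now: datetime, max_loss: timedelta) -> bool:
    """True if the potential data loss fits the (assumed) recovery point objective."""
    return failover_exposure(last_sync_time, now) <= max_loss


if __name__ == "__main__":
    # Hypothetical values read from the storage account before failing over.
    last_sync = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    now = datetime(2024, 1, 1, 12, 10, tzinfo=timezone.utc)
    print(failover_exposure(last_sync, now))                          # 0:10:00
    print(within_tolerance(last_sync, now, timedelta(minutes=15)))    # True
```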

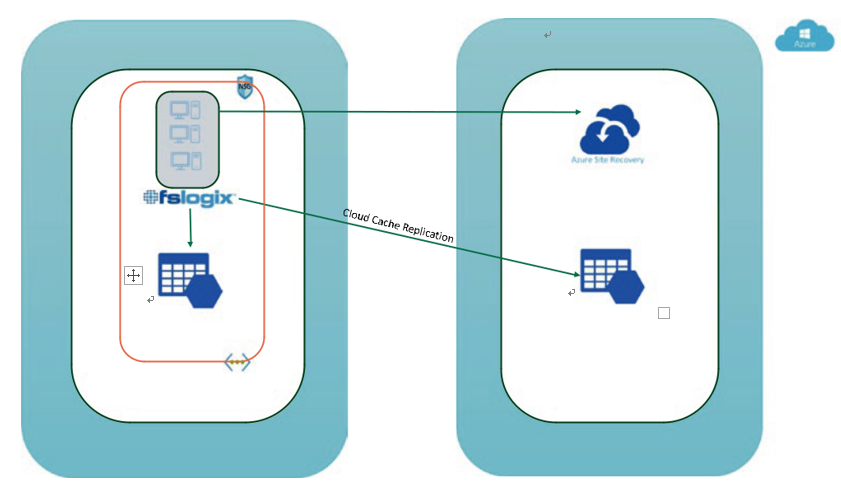

Premium storage does not support georeplication, but because premium storage is recommended for production workloads, you can use the FSLogix cloud cache, which replicates the user profile to multiple storage accounts in different regions. With this setup, the secondary storage account is used automatically if the primary storage account is not accessible. Which storage account acts as primary and which as secondary depends on their order in the CCDLocations registry value, and both storage accounts are kept in sync. If both CCDLocations and VHDLocations are set, CCDLocations takes precedence.
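Because the provider order decides which storage account is primary, it can help to generate the CCDLocations value rather than type it by hand. The sketch below assumes the FSLogix format of SMB provider entries written as `type=smb,connectionString=<UNC path>` and separated by semicolons; verify the exact syntax against the FSLogix documentation before deploying. The storage account names are hypothetical.

```python
from typing import List


def build_ccd_locations(unc_paths: List[str]) -> str:
    """Build a CCDLocations value; the first path is the primary provider."""
    if not unc_paths:
        raise ValueError("at least one storage location is required")
    # One SMB provider per storage account, kept in priority order.
    providers = [f"type=smb,connectionString={path}" for path in unc_paths]
    return ";".join(providers)


if __name__ == "__main__":
    # Hypothetical premium storage accounts in a primary and a DR region.
    value = build_ccd_locations([
        r"\\stprofilesprimary.file.core.windows.net\profiles",
        r"\\stprofilesdr.file.core.windows.net\profiles",
    ])
    print(value)
```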

The main advantage of using a cloud cache with premium storage is that you get high-IOPS storage, which is recommended for production workloads; in addition, you have profile data in two regions, and no manual intervention is required to fail over or fail back the user profile in the case of a disaster in the primary region. See Figure 10-9.

Figure 10-9. FSLogix cloud cache

In Figure 10-9 you can see that FSLogix is syncing the user profile data to both storage accounts using a cloud cache. Refer to Chapter 6 for more details about the cloud cache.
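To make the failover behavior concrete, here is a deliberately simplified toy model, not FSLogix’s actual implementation: writes are copied to every reachable storage provider, and reads are served by the first reachable provider in CCDLocations order. It omits the local cache and the resynchronization of a provider that comes back online; the `Provider` and `CloudCacheModel` names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Provider:
    """One storage location listed in CCDLocations (toy stand-in)."""
    name: str
    available: bool = True
    files: Dict[str, bytes] = field(default_factory=dict)


class CloudCacheModel:
    """Toy model of cloud cache failover, not FSLogix's real behavior in detail.

    Writes are copied to every reachable provider; reads are served by the
    first reachable provider, in CCDLocations order, that holds the file.
    """

    def __init__(self, providers: List[Provider]) -> None:
        self.providers = providers

    def write(self, path: str, data: bytes) -> int:
        written = 0
        for provider in self.providers:
            if provider.available:
                provider.files[path] = data
                written += 1
        if written == 0:
            raise OSError("no storage provider is reachable")
        return written

    def read(self, path: str) -> Optional[bytes]:
        for provider in self.providers:
            if provider.available and path in provider.files:
                return provider.files[path]
        return None


if __name__ == "__main__":
    primary, secondary = Provider("primary"), Provider("secondary")
    cache = CloudCacheModel([primary, secondary])
    cache.write("Profile_user1.vhdx", b"v1")
    primary.available = False                   # simulate a primary-region outage
    print(cache.read("Profile_user1.vhdx"))     # b'v1', served by the secondary
```

The point of the model is the one the chapter makes: because both locations already hold the data, losing the primary requires no manual failover step.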

Azure NetApp Files also has a disaster recovery option: you can configure a replica in a supported cross-region pair. The Azure NetApp Files replication functionality provides data protection through cross-region volume replication. You can asynchronously replicate data from an Azure NetApp Files volume (source) in one region to another Azure NetApp Files volume (destination) in another region. This capability enables you to fail over your critical applications in the case of a region-wide outage or disaster. Replication is supported only between certain region pairs, so check the Microsoft documentation for the current list of supported cross-region replication pairs.

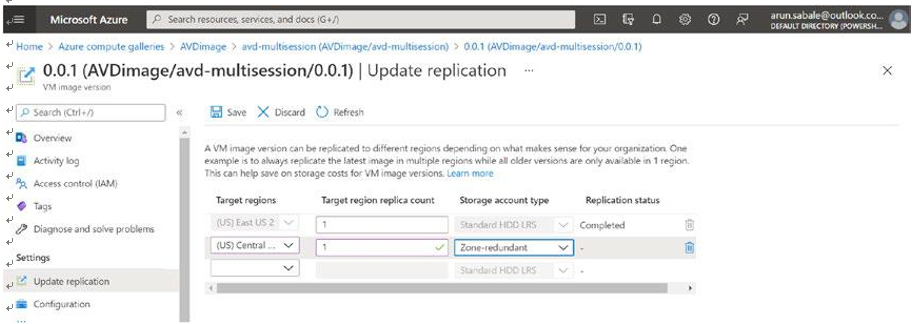

Another important resource you should consider in a DR plan is your golden image stored in the image gallery. The Azure image gallery has a replication feature that allows you to replicate images to specific regions and store the replicated image in the secondary region on LRS or ZRS storage. Refer to Chapter 5 for more details. In the case of a disaster in the primary region, you don’t have to perform any action to fail over the images, because the image copy is already available in the secondary region to create VMs from. See Figure 10-10.

Figure 10-10. Image gallery replication
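For automation, image replication is expressed in an image version’s `publishingProfile.targetRegions`, where each entry carries a region `name`, a `regionalReplicaCount`, and a `storageAccountType`. The sketch below builds that fragment of the request body, assuming the Azure Compute Gallery REST API field names; the region names are hypothetical, and you should check the API reference for the remaining required fields.

```python
import json
from typing import Dict, List

# Replica storage types accepted for gallery image versions.
REPLICA_STORAGE_TYPES = {"Standard_LRS", "Standard_ZRS", "Premium_LRS"}


def build_publishing_profile(replicas: Dict[str, int],
                             storage_account_type: str = "Standard_ZRS") -> Dict:
    """Build the publishingProfile part of an image version request body."""
    if storage_account_type not in REPLICA_STORAGE_TYPES:
        raise ValueError(f"unsupported replica storage type: {storage_account_type}")
    target_regions: List[Dict] = [
        {
            "name": region,                  # Azure region to replicate to
            "regionalReplicaCount": count,   # replicas kept in that region
            "storageAccountType": storage_account_type,
        }
        for region, count in replicas.items()
    ]
    return {"publishingProfile": {"targetRegions": target_regions}}


if __name__ == "__main__":
    # Hypothetical primary and DR regions for the golden image.
    body = build_publishing_profile({"eastus": 1, "westus2": 1})
    print(json.dumps(body, indent=2))
```

Listing both the primary and the DR region keeps a usable image copy on each side, so session hosts can be rebuilt in the DR region without first copying the image.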